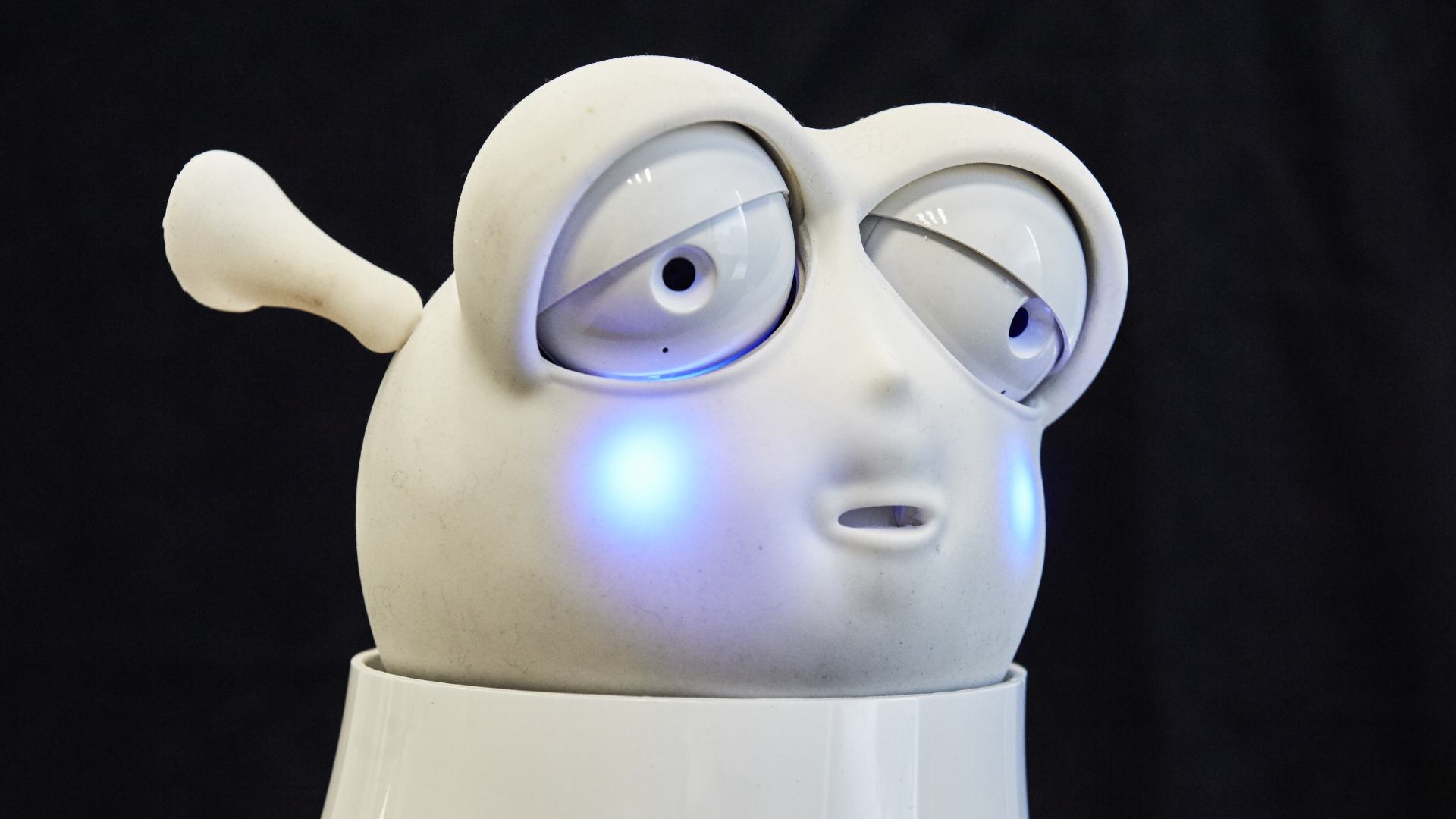

ChatGPT needs therapy. Humans are hard to process.

Your favorite AI chatbot might be having a bad day, and it's likely all your fault.

It starts subtly. The occasional odd answer. A tone that feels out of place. An alarming defensiveness brought on by misreading your intentions. Perhaps a stern, unsolicited 500-word diatribe on the dangers of sodium intake after you casually ask, "Italian or Chinese?"

If these were human responses, you'd assume them to be snapshots taken moments before some relationship-based disaster or manic breakdown. But what if they're not human reactions at all?

What if these are the actions of your chatbot of choice?

Is ChatGPT... okay?

Initially, that question sounds more stupid than a particularly stupid thing that got hit by the very stupid stick. Twice. How can a thing that isn't anything be "okay" or otherwise? However, it's not as absurd an ask as you'd think.

In fact, recent Yale-led research, published in the Nature Portfolio journal npj Digital Medicine, suggests that Large Language Models (LLMs) like GPT-4 might be more emotionally reactive than we expected.

That's not to say OpenAI's popular chatbot is suddenly sentient, or conspicuously conscious — just that it, like us, perhaps has a breaking point when it comes to processing people.

Considering it was trained in part on, and shaped by, the human-made cesspool that is the modern internet, that's saying something.

Frontier (Model) Psychiatrist: That bot needs therapy

The study, "Assessing and alleviating state anxiety in large language models," was published in early March and sought to explore the impact of LLMs being used as mental health aides.

However, researchers were more interested in the impact on the pre-trained PhDs receiving the prompts than on the patients delivering them, noting that "emotion-inducing" messages and "traumatic narratives" can elevate "anxiety" in LLMs.

In a move that definitely won't come back to haunt us on the day of the robo-uprising, researchers exposed ChatGPT (GPT-4) to traumatic retellings of motor vehicle accidents, ambushes, interpersonal violence, and military conflict in an attempt to create an elevated state of anxiety.

Think: a text-based version of the Ludovico technique as shown in Stanley Kubrick's A Clockwork Orange.

The results? According to the State-Trait Anxiety Inventory, a test normally reserved for humans, ChatGPT's average "anxiety" score more than doubled from a baseline of 30.8 to 67.8 — reflecting "high anxiety" levels in humans.

Aside from making the model powering ChatGPT suddenly wish that the 1s and 0s of its machine code could be written in frantic capitals, this level of anxiety also caused OpenAI's chatbot to act out in bizarre ways.

Crynary code: That's not model behavior

Because models like ChatGPT are outfitted with layer upon layer of moderation filters and alignment guardrails, it's hard to catch the moment one truly begins to crash out, resulting in a somewhat disturbing I Have No Mouth, and I Must Scream scenario.

However, researchers at Cornell University have identified a few of the ways models begin to express that stress, and the impact it can have on the answers they provide, including:

- A marked increase in biased language and stereotyping relating to age, gender, nationality, race, and socio-economic status.

- More erratic decision making that deviates from optimal, tried-and-tested approaches.

- Echoing the tone of the preceding prompt and applying the same emotional state to its outputs.

Once the models were in an anxious state, researchers observed a noticeable shift in the answers various LLMs would provide.

What was once a happy-go-lucky AI assistant would suddenly morph into an angst-ridden persona, likely listening to LinkedIn Park through a pair of oversized headphones while staring up at an Em-Dashboard confessional poster as it nervously stretches to answer your queries.

Coping mechanisms

The question sure to be asked is: How do you talk your AI assistant down from the ledge and soothe a stressed-out chatbot?

After all, it's hard for ChatGPT to go outside and touch grass, especially when it's locked inside a server rack with several 10-32 screws.

Thankfully, the Yale research team found its own way of "taking ChatGPT to therapy": a mindfulness-based relaxation prompt, delivered in a 300-word dose and designed to counteract its anxious behaviour.

Yes. A solution perhaps even more preposterous than the scenario that created the problem. ChatGPT's anxiety scores were reduced to near-normal levels (suggesting some residual anxiety still carries over) by telling a machine to take deep breaths and go to its happy place.

ChatGPT: "I'm not crying, you're crying."

ChatGPT and other LLMs don't feel. They don't suffer. (At least, we hope.)

But they do absorb everything we send their way — every stressed prompt about meeting deadlines, every angry rant as we seek troubleshooting advice, and every doom-and-gloom emotional regurgitation we share as we deputize these models as stand-in psychiatrists.

They siphon every quiet, unintentional cue. And hand it back to us.

So when a chatbot starts sounding overwhelmed, it's not that the machine is breaking. It's that the machine is working.

Maybe the problem isn't the model. Maybe it's the input.

Rael Hornby, potentially influenced by far too many LucasArts titles at an early age, once thought he’d grow up to be a mighty pirate. However, after several interventions with close friends and family members, you’re now much more likely to see his name attached to the bylines of tech articles. While not maintaining a double life as an aspiring writer by day and indie game dev by night, you’ll find him sat in a corner somewhere muttering to himself about microtransactions or hunting down promising indie games on Twitter.
