Never Take an Out-of-Focus Picture Again: Adobe's New Plenoptic Lens Tech

After giving a brief demonstration during the keynote address at Nvidia's GPU Technology Conference, Adobe went into more detail about computational photography using plenoptic lenses, a method of taking pictures so that any part of a photo can be brought into focus after the fact.

While the technology is still a long way from being commercialized, it's an interesting look into the future of photography.

By using a bunch of tiny lenses and some rendering software, users will be able to select what they want in focus, even after the photo has been taken. This technology will let users create 3D images on the fly as well.

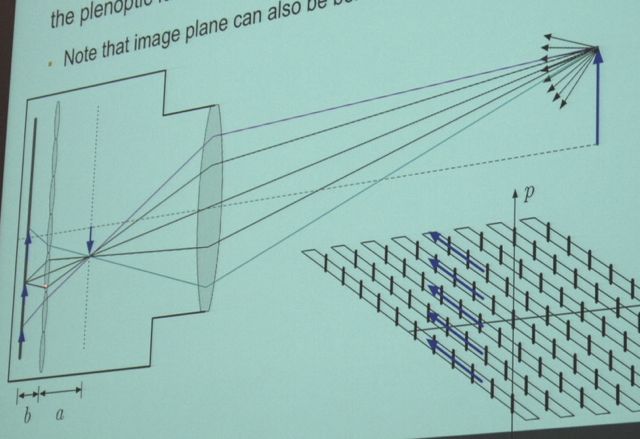

The way it works is this: Adobe placed a plenoptic lens--basically, hundreds of tiny lenses crammed together--between a camera's main lens and its image sensor. In this case, they used a medium-format camera and a digital back, probably because it afforded more space than a smaller DSLR.

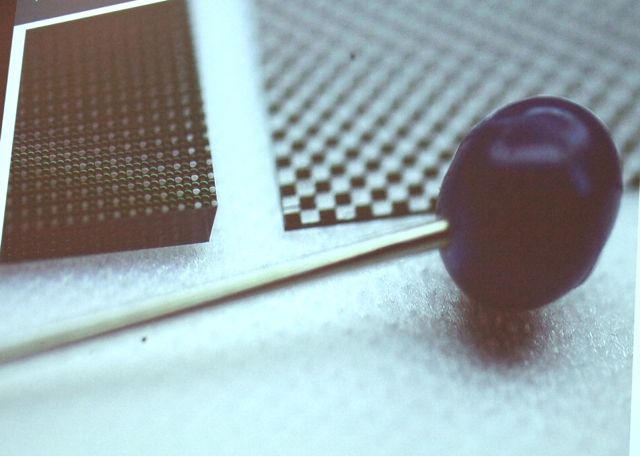

Here's what the plenoptic lens looks like up close. The lens is on the left; that large round object is a pin head:

And here's what it looks like in the camera itself:

By placing the plenoptic lens here, the camera's sensor records what looks like a bunch of fragmented images. In reality, each fragment holds extra information about the individual light rays entering the camera, including the direction they arrived from.

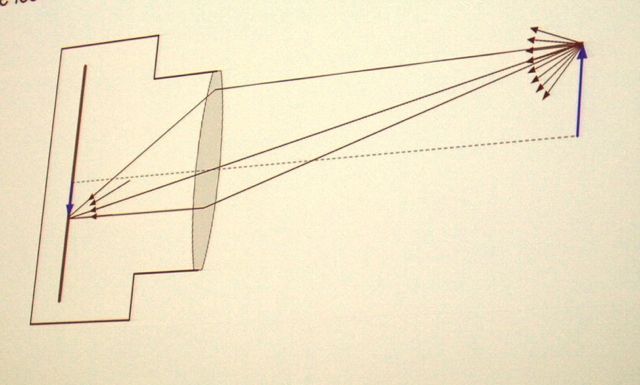

Traditionally, when a camera takes a picture, a ray of light enters the lens and gets recorded on a specific spot, like this:

With a plenoptic lens, light from that same point in the scene passes through several of the tiny lenses before making it to the sensor, so the point gets recorded from several slightly different perspectives.

Because there are so many tiny lenses in front of the sensor, the resulting image looks like this:

But, using some fancy-type calculations (you can find detailed information about them on Todor Georgiev's Web site; he's the Senior Research Scientist II, Photoshop Group, at Adobe), they're able to resolve those little fragments into a normal image.
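To give a rough feel for what "resolving the fragments" can mean, here is a minimal sketch in plain Python. It is not Adobe's software. It assumes the sensor image is a grid of square fragments (microimages) of a known size, and it simply keeps the central patch of each one and tiles those patches together. The function name and the `micro_size` and `patch_size` parameters are made up for illustration, and a real renderer would also have to deal with lens alignment, inverted fragments, and blending.

```python
def render_from_microimages(raw, micro_size, patch_size):
    """Tile the central patch of every microimage into one picture.

    raw        : list of rows (lists of pixel values) from the sensor
    micro_size : side length of one square microimage, in pixels (assumed)
    patch_size : side length of the central patch kept from each microimage
    """
    rows = len(raw) // micro_size       # microimages down the sensor
    cols = len(raw[0]) // micro_size    # microimages across the sensor
    start = (micro_size - patch_size) // 2  # offset of the central patch
    out = []
    for r in range(rows):
        for dy in range(patch_size):
            y = r * micro_size + start + dy
            line = []
            for c in range(cols):
                x = c * micro_size + start
                line.extend(raw[y][x:x + patch_size])
            out.append(line)
    return out


# Toy 8x8 "sensor" holding a 2x2 grid of 4x4 microimages.
raw = [[row * 8 + col for col in range(8)] for row in range(8)]
picture = render_from_microimages(raw, micro_size=4, patch_size=2)
```

In this toy version the output is smaller than the sensor image, which hints at a real trade-off: some of the sensor's pixels go toward recording perspective rather than raw resolution.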

Oops--the girl in the foreground is out of focus. But not really; Georgiev just has it set like that. Basically, all those little lenses give the camera what amounts to an infinite depth of field, and it's up to the user to decide what he or she wants in focus. As you'll see in the video, he can determine what's in focus, and what's not, by moving a slider in the menu to the left of the image.
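How might a single slider change what's in focus? One common textbook approach to light-field refocusing is "shift and add": slide each perspective sideways by an amount tied to the slider, then average them. The sketch below shows that idea on one row of pixels. It is a generic illustration, not necessarily what Georgiev's demo does, and the `refocus` function, its `offsets` argument, and the integer `slider` value are all assumptions made for the example.

```python
def refocus(views, offsets, slider):
    """Shift-and-add refocusing on a single row of pixels.

    views   : list of equal-length lists, one row of pixels per viewpoint
    offsets : viewpoint position of each view (for example -1, 0, 1)
    slider  : whole pixels of shift per unit of offset; each value
              brings a different depth into focus
    """
    width = len(views[0])
    out = []
    for x in range(width):
        total = 0.0
        count = 0
        for view, off in zip(views, offsets):
            src = x + slider * off      # where this view saw the point
            if 0 <= src < width:        # skip samples off the edge
                total += view[src]
                count += 1
        out.append(total / count if count else 0.0)
    return out


# One bright point, seen one pixel apart by three neighboring viewpoints.
views = [
    [0, 0, 9, 0, 0, 0, 0],
    [0, 0, 0, 9, 0, 0, 0],
    [0, 0, 0, 0, 9, 0, 0],
]
offsets = [-1, 0, 1]

sharp = refocus(views, offsets, slider=1)    # shifts line the point up
blurred = refocus(views, offsets, slider=0)  # no shift smears it out
```

With the slider at 1, the three views agree and the point stays crisp; at 0, the same point is spread across three pixels, which is what out of focus looks like here.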

All in all, it was a very intriguing demonstration. While the technology has yet to be miniaturized--and the software crammed into the camera itself--it has the potential to be a powerful tool for professional and amateur photographers alike.

Michael was the Reviews Editor at Laptop Mag. During his tenure at Laptop Mag, Michael reviewed some of the best laptops at the time, including notebooks from brands like Acer, Apple, Dell, Lenovo, and Asus. He wrote in-depth, hands-on guides about laptops that defined the world of tech, but he also stepped outside of the laptop world to talk about phones and wearables. He is now the U.S. Editor-in-Chief at our sister site Tom's Guide, where he oversees all evergreen content and the Homes, Smart Home, and Fitness/Wearables categories for the site.