Are the Samsung Galaxy S23 Ultra's incredible Moon shots fake? We tested it ourselves

Which is the hoax? The outrage or the photos?

Samsung's Space Zoom is once again under fire, with accusations that the company is "faking" photos of the moon captured by the Galaxy S23 Ultra. This is nothing new. Space Zoom debuted on the Galaxy S20 Ultra in 2020, and its eye-popping 100x zoom, which produces crystal-clear images of the moon on par with (or better than) far more expensive telescopes and cameras, was immediately called into question.

The most recent round of controversy started on Reddit, with a post by user "ibreakphotos" seeking to demonstrate that Samsung's software is applying details to these moon shots that are not present at the time of capture. As I enjoy mobile astrophotography, I wanted to take a closer look at these new claims and clear up some of the confusion around it.

That's no moon (shot)

One problem with this controversy is defining exactly what it would mean to be "faking" the moon shots. Samsung is applying AI and machine learning (ML) to Space Zoom images in order to produce clean photos of the moon. That point isn't under contention.

Input covered this extensively in a 2021 deep dive on Space Zoom. Samsung explained that the feature uses Scene Identifier to recognize the Moon based on AI model training, then reduces blur and noise and applies Super Resolution processing across multiple captures, similar to HDR processing. For what it's worth, Input ultimately concluded that Samsung wasn't "faking" the images of the moon.

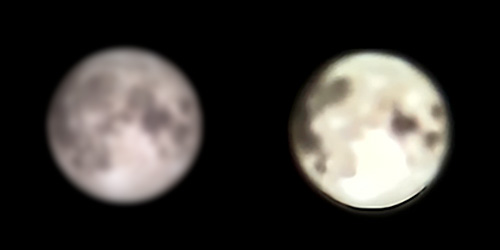

The claim ibreakphotos makes in their recent Reddit thread is that Samsung is adding textures to the images that the phone never captured. They tested this by taking a low-resolution (170 x 170 pixel) image of the moon and applying a Gaussian blur to eliminate virtually all detail, then shutting off all their lights and snapping a photo of the displayed image using Space Zoom. Below is a look at the side-by-side of the source and the final result that they posted.

This is a startling result, and it would certainly support the claims made in the thread, since the phone could not possibly resolve the detail shown on the right from the image on the left. However, I have had no luck recreating these results using the same source image, and more than half a dozen colleagues have also tried and failed to get anything close. One sample side-by-side is below; as you can see, our result is at best slightly less blurry than the original.

Now, this isn't to say that they are lying about their results, only that we have been unable to replicate them. We don't know every variable in their testing, so perhaps something is throwing it off. Are we on the same version of One UI and Samsung's camera software? Are our rooms slightly brighter or darker than theirs? Did we apply precisely the same amount of Gaussian blur to the image? Could the monitor itself make a difference to the result?
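For anyone who wants to attempt the test themselves, here is a minimal Python sketch of how a comparable test image could be prepared. It assumes the Pillow imaging library, and the function name `blur_test_image` and its default blur radius are our own illustrative choices. The 170 x 170 pixel size comes from the Reddit post, but we don't know the exact blur radius used there, which is one of the variables that could explain the different results.

```python
from PIL import Image, ImageFilter


def blur_test_image(img: Image.Image, size: int = 170, blur_radius: float = 4.0) -> Image.Image:
    """Downscale an image to size x size pixels, then apply a Gaussian blur.

    Hypothetical helper: blur_radius=4.0 is an assumed default, not the value
    used in the original Reddit test.
    """
    gray = img.convert("L")  # grayscale is enough for a photo of the moon
    small = gray.resize((size, size), Image.LANCZOS)  # throw away most fine detail
    return small.filter(ImageFilter.GaussianBlur(radius=blur_radius))  # smear what remains
```

To use it, open a photo of the moon cropped tightly to a square with `Image.open()`, pass it to the function, save the result, and display it full screen on a monitor in a dark room before shooting it with Space Zoom. Raising `blur_radius` removes more detail, so it is worth trying a few values.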

I can only say that in my testing and that of my colleagues, a blurry moon remains blurry. Ultimately, I think the larger question this raises is how much AI enhancement you consider acceptable before a photo becomes "fake."

Where's the line between "real" and "fake" with AI-enhanced photography?

Computational, AI-enhanced, and ML-enhanced photography has completely transformed mobile photography over the last several years. Google essentially built the Pixel on it, with each phone producing images far superior to what you would expect from its hardware. Now every mobile chipset maker focuses heavily on AI performance to improve and speed up our phones' ability to produce outstanding images (among numerous other AI-based tasks).

Features like portrait mode give you results that never actually happened in the camera. Do you care that the background blur is artificial if it gives you a great photo that you want to share? Is that "fake"?

What about HDR or Night modes? Those capture multiple images and combine them. Once again, if the resulting shot is closer to what you wanted to capture, isn't that ultimately the goal?

Taking it further, what about Google's Magic Eraser? You are completely eliminating elements of the photo that you actually captured, but presumably it leaves you with a result that you like better.

Now, I can appreciate that some users want a photo to capture a scene exactly as they saw it, without any embellishment. For them, Samsung's Expert RAW is the answer.

However, the majority of users are just taking snapshots of their lives and the world around them and the brilliance of having an excellent camera on their phone is that it is always on them and that it reliably captures the best photo possible in as short a time as possible.

From what I have seen and what I have been able to reproduce myself, Samsung is heavily applying AI enhancements to photos of the Moon without replacing them entirely. Whether you deem that "fake" or not is up to you.

Sean Riley has been covering tech professionally for over a decade now. Most of that time was as a freelancer covering varied topics including phones, wearables, tablets, smart home devices, laptops, AR, VR, mobile payments, fintech, and more. Sean is the resident mobile expert at Laptop Mag, specializing in phones and wearables, and you'll find plenty of news, reviews, how-tos, and opinion pieces on these subjects from him here. But Laptop Mag has also proven a perfect fit for that broad range of interests, with reviews and news on the latest laptops, VR games, and computer accessories, along with coverage of everything from NFTs to cybersecurity and more.