How Apple Intelligence could fix iOS' biggest flaw

It's not the features of Apple Intelligence that I'm excited about, it's the philosophy

Apple Intelligence, which is rolling out to Apple devices later this month, promises to do a lot: summarize your texts, read your emails, generate emojis, and clean up your rat's nest of Instagram and TikTok notifications.

According to Apple, it's a watershed moment for both the iOS and macOS experience and already (despite not even being fully out yet) a core marketing tactic for Apple's Holy Trinity of gadgets: the iPad, iPhone, and MacBooks, all of which are being billed as "built for Apple Intelligence."

Whether Apple Intelligence delivers on its promises or not, it's clearly "The Next Big Thing." But, for me, it's not what Apple Intelligence promises to do for the future of next-gen devices that excites me; it's what Apple's big push into AI could do to bring back the past.

A new way to iOS

iOS has changed a lot over the years.

For almost two decades, Apple's mobile operating system garnered a reputation as being the more user-friendly, streamlined alternative to Android, and for many years, that reputation — at least in my opinion — was warranted.

Apple's simplified UI and clean design worked in tandem with perks like a super-responsive touchscreen and thoughtful, native apps to create a device that felt intuitive.


But time has a way of shifting even the most ingrained perceptions, and as the years have passed, what once felt like simplicity has given way to the opposite — bloat.

The urge to add complexity to iOS has its benefits, of course (you can do more with today's software than you ever could before), but it also creates logistical issues with UI. Adding more features means you have to devise a way to squeeze all of them in, and sometimes the places those features get squeezed into aren't exactly ideal.

As a result, you've got iOS capabilities buried so deep that you might not even know they exist. If I asked you to send a text in "invisible ink," would you know how? How about scheduling text messages for a future date and time? If I asked you to make a virtual mood board with your iPhone, would you know which app to use?

Some iPhone users might know the answers to all of those questions, but just as many wouldn't. That's likely a product of feature bloat, and what results is an increasingly cluttered experience that feels less like the iOS of yore.

But what if there was another way to glue all of the many features of iOS together? That's where Apple Intelligence comes in.

An AI for UI

Apple Intelligence isn't just a set of new AI features; it's potentially a new way to use your device.

For example: instead of you manually telling your device what to do by tapping through the iOS interface on a touchscreen, Apple Intelligence might be able to anticipate tasks before you even ask. Glimmers of that philosophy are seen in features like summaries, which assess your incoming notifications and present you with only the most important ones.

The idea here is straightforward: notifications are messy, and if AI can clean them up for you, iPhone users will have a better experience. It's a somewhat new idea, but that philosophy is unlikely to stop there.

There are a lot of different applications for AI right now, image and video generation to name a couple, but one of the most enticing is also in some ways the most boring. The concept of AI agents, for example, may not immediately scream "The future is nigh," but if the idea pans out, it could be pivotal.

AI agents, if you're unfamiliar, are exactly what they sound like: bots capable of adeptly taking over tasks that might otherwise be done manually by a human. While purveyors of AI agents like Microsoft have positioned them mostly as tools for business, it's not hard to see how they could be translated for personal use.

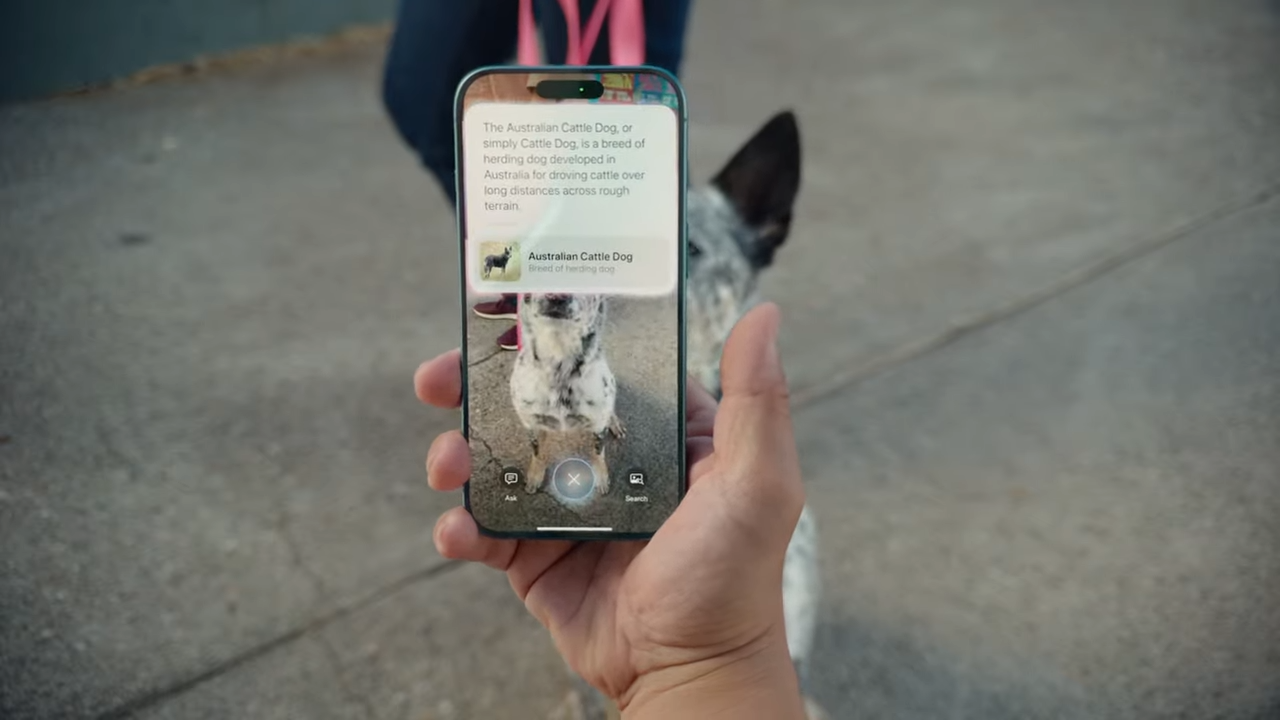

While Apple Intelligence might not include an AI agent specifically, the seeds are there. An AI-supercharged Siri, for example, is a major precursor. Apple says its new Siri voice assistant, which is imbued with large language model (LLM) capabilities thanks to an OpenAI partnership, will be a lot more sophisticated in its ability to understand commands.

LLMs are decidedly much better at understanding natural language and, as a result, have an easier time carrying out a wider range of tasks, even those that are multistep and have more than one command in a single prompt.

It's still early days for Apple Intelligence, but it wouldn't be shocking to see Siri become more of an interface for using your iPhone — a realization of the original plan for voice assistants — and Apple's own AI agent, so to speak.

The AI agent philosophy extends beyond that: maybe it means Apple Intelligence learning your habits and suggesting apps, activities, or features based on them. Maybe it just means a better voice assistant in Siri, one that's ready to meet your commands more adeptly and offers a wider range of abilities.

Either way, the idea is baked into Apple Intelligence, both in its features (nascent or not) and in its philosophy, and this is just the beginning.

Getting smarter

Before Apple Intelligence can be considered a revolution in UI, it has to be functional. It's hard to say how far along Apple is in achieving that baseline without using the features first, but if Apple Intelligence is anything like other AI from Google or Microsoft, it will be a bit of trial and error.

That's to say LLMs on phones won't have an impact overnight, but phone makers already have their gears turning about how they might in the future. Carl Pei, CEO of Nothing, for example, has gone on record talking about how AI might offer an entirely new way of interacting with your device — one that could end our reliance on apps and finally bring us into a new age of ambient computing.

Similarly, for years now, Google has completely redefined what a picture on a smartphone can and should be through AI-intensive features like Magic Eraser and Best Take, which use AI to heavily alter photos on your phone after you've taken them.

Apple still has to figure out what its own thing is when it comes to AI, but I'd like to see everything come back to the basics. iOS, in my opinion, still sets the bar for mobile operating systems, but through years of dilution, its strengths don't seem quite as pronounced.

That doesn't mean it has to stay that way, however. Apple Intelligence might be the future, but maybe the secret to its success lies in the past.

James is Senior News Editor for Laptop Mag. He previously covered technology at Inverse and Input. He's written about everything from AI to phones and electric mobility, and likes to make unlistenable rock music with GarageBand in his downtime. Outside of work, you can find him roving New York City on a never-ending quest to find the cheapest dive bar.